Advancing AI requires a full-stack approach, with a powerful foundation of computing infrastructure — including accelerated processors and networking technologies — connected to optimized compilers, algorithms and applications.

NVIDIA Research is innovating across this spectrum, supporting virtually every industry in the process. At this week’s International Conference on Learning Representations (ICLR), taking place April 24-28 in Singapore, more than 70 NVIDIA-authored papers introduce AI developments with applications in autonomous vehicles, healthcare, multimodal content creation, robotics and more.

“ICLR is one of the world’s most impactful AI conferences, where researchers introduce important technical innovations that move every industry forward,” said Bryan Catanzaro, vice president of applied deep learning research at NVIDIA. “The research we’re contributing this year aims to accelerate every level of the computing stack to amplify the impact and utility of AI across industries.”

Research That Tackles Real-World Challenges

Several NVIDIA-authored papers at ICLR cover groundbreaking work in multimodal generative AI and novel methods for AI training and synthetic data generation, including:

- Fugatto: The world’s most flexible audio generative AI model, Fugatto generates or transforms any mix of music, voices and sounds described with prompts using any combination of text and audio files. Other NVIDIA models at ICLR improve audio large language models (LLMs) to better understand speech.

- HAMSTER: This paper demonstrates that a hierarchical design for vision-language-action models helps them transfer knowledge from off-domain fine-tuning data (inexpensive data that doesn’t need to be collected on actual robot hardware), which in turn improves a robot’s skills in testing scenarios.

- Hymba: This family of small language models uses a hybrid model architecture to create LLMs that blend the benefits of transformer models and state space models, enabling high-resolution recall, efficient context summarization and common-sense reasoning. With its hybrid approach, Hymba improves throughput by 3x and reduces cache size by almost 4x without sacrificing performance.

- LongVILA: This training pipeline enables efficient visual language model training and inference for long video understanding. Because training AI models on long videos is compute- and memory-intensive, this paper introduces a system that efficiently parallelizes long video training and inference, with training scalability up to 2 million tokens on 256 GPUs. LongVILA achieves state-of-the-art performance across nine popular video benchmarks.

- LLaMaFlex: This paper introduces a new zero-shot generation technique to create a family of compressed LLMs based on one large model. The researchers found that LLaMaFlex can generate compressed models that are as accurate as or better than state-of-the-art pruned, flexible and trained-from-scratch models. This capability could significantly reduce the cost of training model families compared with techniques like pruning and knowledge distillation.

- Proteina: This model can generate diverse and designable protein backbones, the framework that holds a protein together. It uses a transformer model architecture with up to 5x as many parameters as previous models.

- SRSA: This framework addresses the challenge of teaching robots new tasks using a preexisting skill library — so instead of learning from scratch, a robot can apply and adapt its existing skills to the new task. By developing a framework to predict which preexisting skill would be most relevant to a new task, the researchers were able to improve zero-shot success rates on unseen tasks by 19%.

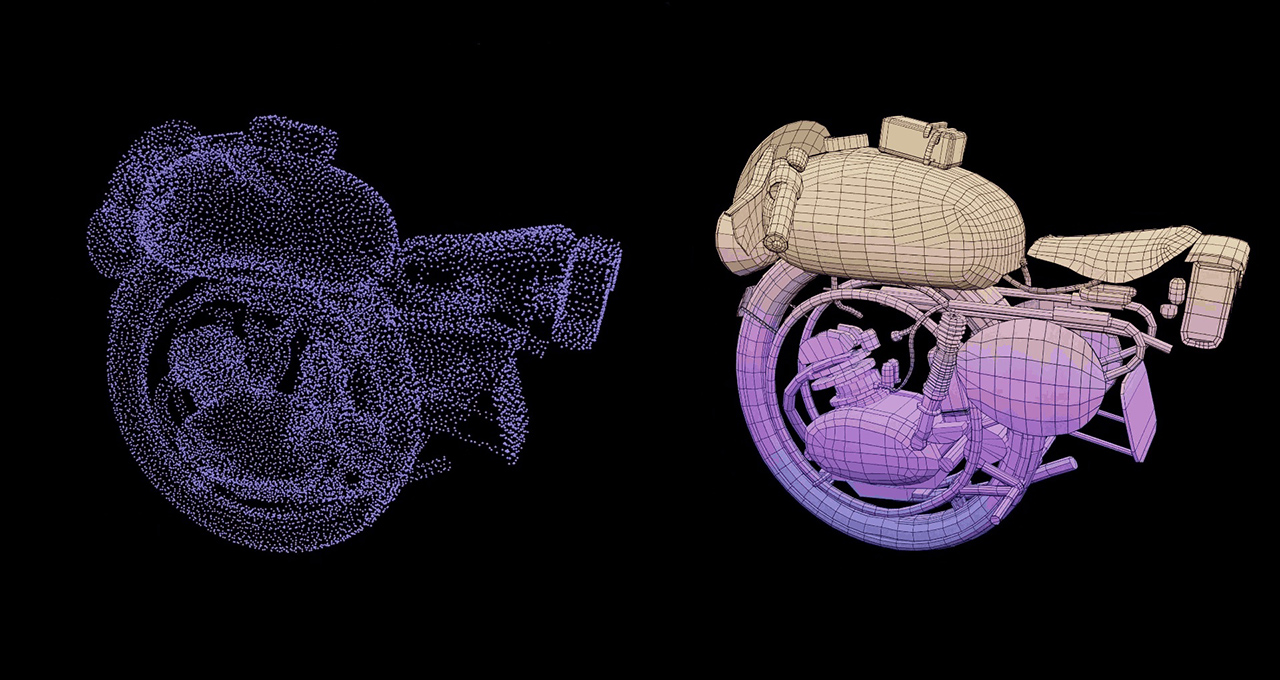

- STORM: This model can reconstruct dynamic outdoor scenes — like cars driving or trees swaying in the wind — with a precise 3D representation inferred from just a few snapshots. The model, which can reconstruct large-scale outdoor scenes in 200 milliseconds, has potential applications in autonomous vehicle development.

Discover the latest work from NVIDIA Research, a global team of around 400 experts in fields including computer architecture, generative AI, graphics, self-driving cars and robotics.